Part 2 - Beginning the Import Code

Part 1 of this series showed you how to set up the connection to CRM in preparation for importing records from a SQL Server table. You may want to do this because you have SQL Server data in another system, or because you receive files from an outside data source that reside in a data warehouse. Part 2 is about writing the code to import records as Accounts in CRM 2011.

Create Your Staging Table

Creating a staging table to hold data temporarily while importing to CRM is helpful for several reasons. First, if the schema or data in the external system you are exporting from changes, you only need to fix the part that exports this data to your SQL table. The worker code that imports data to CRM stays the same, because it only looks at the staging table. Second, depending on where the source data is housed, importing from a staging table can take significantly less time. Such scenarios include your data warehouse being on a different server than your CRM, or the source data being in the cloud. If your staging table is on the same server as CRM, your import will be faster. Notice I didn't say the same database, but the same server. In my experience, best practice is to create a separate database for your custom tables and stored procedures, so that if the deployed database ever needs to be dropped and rebuilt, your custom code doesn't go away with it.

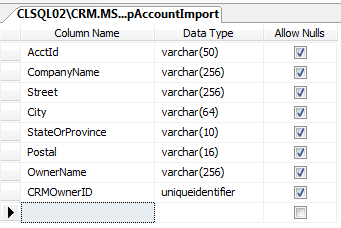

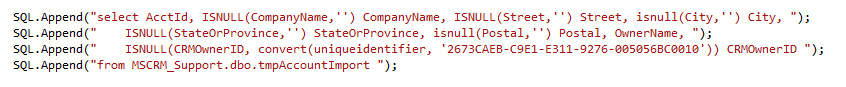

For this example we will create a new staging table in your support database. Naming conventions are personal, so feel free to use whatever names work for you. I have named my support database "MSCRM_Support" and created a staging table called "MSCRM_Support.dbo.tmpAccountImport". At the very least, create the staging table, add the following fields to it and then save the table:

Figure 9

I am not going to get into primary keys, indexes or nulls at this point. This is a separate topic for a possible later discussion.

The AcctId should be the unique identifier from the system you are importing from. In my case, I am importing it as a varchar, but it can just as easily be any other type. This field, along with all the others except CRMOwnerID, should be populated from your source data. The OwnerName field is meant to bring over the owner from the other system. In my case, there is an owner on the Accounts in the source data.

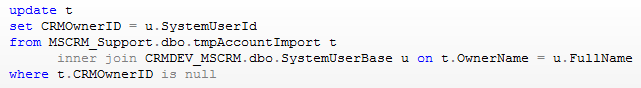

So now we need to find the CRM owner ID that matches the owner name from the source record. What you need here is the SystemUserID (a GUID) from the SystemUserBase table, or the SystemUser view. You will want to update the CRMOwnerID field in your tmpAccountImport table with this GUID. To do that, join the tmpAccountImport table with the SystemUserBase table in your main CRM database. You can join on a number of different fields, depending on what your source data looks like. Some appropriate fields could be FirstName, LastName, and FullName. Since my source data has the user's full name, we will be using that.

Here is an example of what your update query might look like:

Figure 10

So what if you are importing some records that already have an owner, and some that do not? What I do is designate a CRM user as a default user, and let the salespeople assign the Accounts amongst themselves in the CRM system. In this case, you can simply update the tmpAccountImport table with the GUID for your default user wherever no match was found.
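The owner mapping and the default-owner fallback described above can be sketched in C# as two UPDATE statements run against the support database. This is only an illustration under stated assumptions, not the query from Figure 10: `Contoso_MSCRM` is a hypothetical name standing in for your CRM organization database, the class and method names are made up, and CRMOwnerID is assumed to be a nullable uniqueidentifier column.

```csharp
using System;
using System.Data.SqlClient;

static class OwnerMapping
{
    // Assumption: "Contoso_MSCRM" is a placeholder for your CRM organization database.
    // The join uses FullName because the source data carries the owner's full name.
    const string MatchOwnersSql = @"
        UPDATE t
        SET    t.CRMOwnerID = u.SystemUserId
        FROM   MSCRM_Support.dbo.tmpAccountImport AS t
        INNER JOIN Contoso_MSCRM.dbo.SystemUserBase AS u
                ON u.FullName = t.OwnerName;";

    // Any row still without an owner after the join gets the default CRM user.
    const string DefaultOwnerSql = @"
        UPDATE MSCRM_Support.dbo.tmpAccountImport
        SET    CRMOwnerID = @DefaultOwnerId
        WHERE  CRMOwnerID IS NULL;";

    public static void Run(string connectionString, Guid defaultOwnerId)
    {
        using (var conn = new SqlConnection(connectionString))
        {
            conn.Open();

            using (var match = new SqlCommand(MatchOwnersSql, conn))
            {
                int matched = match.ExecuteNonQuery();
                Console.WriteLine("Rows matched to a CRM user: " + matched);
            }

            using (var fallback = new SqlCommand(DefaultOwnerSql, conn))
            {
                fallback.Parameters.AddWithValue("@DefaultOwnerId", defaultOwnerId);
                int defaulted = fallback.ExecuteNonQuery();
                Console.WriteLine("Rows assigned to the default user: " + defaulted);
            }
        }
    }
}
```

The cross-database join only works when both databases live on the same SQL Server instance, which is one more reason to keep the staging table on the CRM server. The same two statements can of course be run directly in a query window instead.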

Now you have your staging table ready to import to CRM.

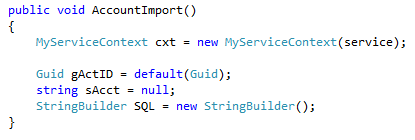

Creating the Structure for the Account Import Code

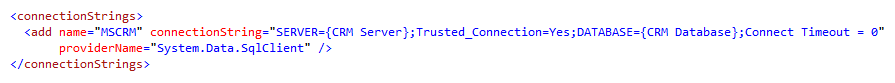

Start out by adding your connection string to the App.config file. Here's an example of what yours should look like. It should be within the …

Figure 11
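As a rough sketch of how a connection string stored in App.config can be read back from code, the snippet below uses the standard .NET `ConfigurationManager`. The entry name `SupportDb`, the server name in the comment, and the helper class are hypothetical and are not taken from Figure 11.

```csharp
using System;
using System.Configuration; // needs a project reference to System.Configuration.dll

static class SupportDbConfig
{
    // Hypothetical App.config entry, inside <connectionStrings> under <configuration>:
    //   <add name="SupportDb"
    //        connectionString="Data Source=MYSERVER;Initial Catalog=MSCRM_Support;Integrated Security=True"
    //        providerName="System.Data.SqlClient" />
    public static string GetConnectionString()
    {
        ConnectionStringSettings settings =
            ConfigurationManager.ConnectionStrings["SupportDb"];

        // The indexer returns null when no entry with that name exists.
        if (settings == null)
        {
            throw new ConfigurationErrorsException(
                "Connection string 'SupportDb' was not found in App.config.");
        }

        return settings.ConnectionString;
    }
}
```

Keeping the string in App.config means the server or database name can change without recompiling the importer.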

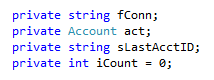

Now go back to Program.cs and add the variables from Figure 12, below. Put them just under the variables we added in Part 1 of this series. fConn will hold our connection string. "act" is for the Account entity we are working with. sLastAcctId will hold the ID of the last Account processed, so that if there is an error with the import we can tell which record broke it. iCount keeps count of the total number of records imported.

Figure 12

Figure 13

Figure 14

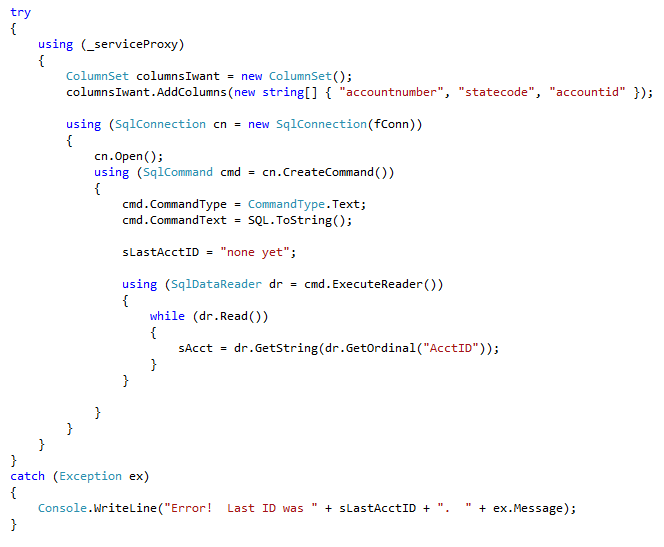

Next, add a try/catch block and connect to the _serviceProxy. Set up a new ColumnSet and add to it the names of the fields that you need to see or compare in CRM. Columns are added as a string array. The column names are the actual field names in CRM, and they are case sensitive, so make sure you use the names exactly as CRM defines them. Since we are importing/updating records, we only need to retrieve a few fields. Retrieving more than you need will slow down your import.
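A minimal sketch of the ColumnSet idea, assuming the CRM 2011 SDK assemblies are referenced: it asks CRM for three Account attributes only and wraps the call in a try/catch. The attribute list, the lookup on `accountnumber`, and the class and method names are illustrative assumptions, not the columns or matching rule used in this series.

```csharp
using System;
using System.ServiceModel;
using Microsoft.Xrm.Sdk;        // CRM 2011 SDK
using Microsoft.Xrm.Sdk.Query;

static class AccountLookup
{
    // Pass in the _serviceProxy from Part 1; it implements IOrganizationService.
    public static EntityCollection FindByAccountNumber(IOrganizationService service, string sAcct)
    {
        // Attribute (logical) names are lowercase and case sensitive.
        var cols = new ColumnSet(new string[] { "name", "accountnumber", "ownerid" });

        try
        {
            var query = new QueryExpression("account") { ColumnSet = cols };

            // Assumption: the source AcctId was stored in accountnumber.
            query.Criteria.AddCondition("accountnumber", ConditionOperator.Equal, sAcct);

            return service.RetrieveMultiple(query);
        }
        catch (FaultException<OrganizationServiceFault> ex)
        {
            Console.WriteLine("CRM returned a fault: " + ex.Detail.Message);
            throw;
        }
    }
}
```

Listing only the columns you compare keeps each response small, which adds up quickly over thousands of staged rows.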

You can now add code to connect to the database and read through the records. Inside the "while" code block, assign the sAcct variable the value of the "AcctId" field from the current record. Your code should now look something like Figure 15:

Figure 15
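The read loop described above might look roughly like the following. This is a hedged sketch rather than the code from Figure 15: the variable names sAcct, sLastAcctId and iCount come from the text, while their types, the SELECT statement, and the class and method names are assumptions.

```csharp
using System;
using System.Data.SqlClient;

static class StagingReader
{
    // Reads every staged row and returns how many were seen.
    public static int ReadStagingRows(string connectionString)
    {
        int iCount = 0;              // total records read
        string sLastAcctId = "";     // last AcctId touched, for error reporting

        const string sql = "SELECT AcctId FROM MSCRM_Support.dbo.tmpAccountImport";

        try
        {
            using (var conn = new SqlConnection(connectionString))
            using (var cmd = new SqlCommand(sql, conn))
            {
                conn.Open();
                using (SqlDataReader reader = cmd.ExecuteReader())
                {
                    while (reader.Read())
                    {
                        string sAcct = reader["AcctId"].ToString();
                        sLastAcctId = sAcct;

                        // The CRM create/update for this row is covered in Part 3.
                        iCount++;
                    }
                }
            }
        }
        catch (SqlException ex)
        {
            Console.WriteLine("Import stopped after AcctId '" + sLastAcctId + "': " + ex.Message);
            throw;
        }

        return iCount;
    }
}
```

Recording sLastAcctId before doing any work on the row is what makes the error message useful: it names the record that was in flight when the failure happened.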

Part 3 of this series will finish out the import code and show how to use your connection string. Finally, we will write out the results of the import.

Related Links:

Part 1 - Getting Started

Part 3 - Finishing the Import/Update Code

Part 4 - Reconciling More than One Match - Merging Accounts
